Black Mirrors, Façades, and Confidence Machines (Part 2)

A danger of AI that not enough people are talking about

Last week, in Part 1 of this two-part post, I wrote about the challenges digital media presents to civic discourse. Using the metaphor of the “black mirror,” I thought a bit about how and why digital media distorts public discourse in unprecedented ways.

One effect of this distortion is a rampant crisis of confidence in institutions. Since the first decade of the 2000s, trust in higher education, news media, scientific experts, state and federal governments, medicine, the courts, and law enforcement has plummeted. In my post on grievance capitalism, I put a good part of the blame on the mishandling of the Great Recession. But something else happened around 2008: the introduction of the “smartphone” to the American market. In 2007, Apple introduced the iPhone. In 2008, Android phones appeared. These devices, of course, took social media to a whole new level. As Jonathan Haidt, Siva Vaidhyanathan, Christine Rosen, L.M. Sacasas and many others have argued, the anti-social effects of social media have been pervasive and profound.

Now, nearly two decades later, we are in the middle of another tech revolution: Artificial Intelligence. The idea of AI has been around since the 1950s. The goal then was to make computers that could “think.” However, the release of the generative AI chatbot ChatGPT in November 2022 seemed to demonstrate that machines could do more than just “think.” They could act, and so become a technologist’s grandest dream: an encyclopedic engine of information production and problem solving.

One way to understand the frenzy over AI is to see it as a grand cultural compensation for the distorting effects social media have had on our public and personal lives. On the surface it represents a potential counter-social-media technology, for it promises to meet a longing so many of us have in an age of black mirrors: to get things right.

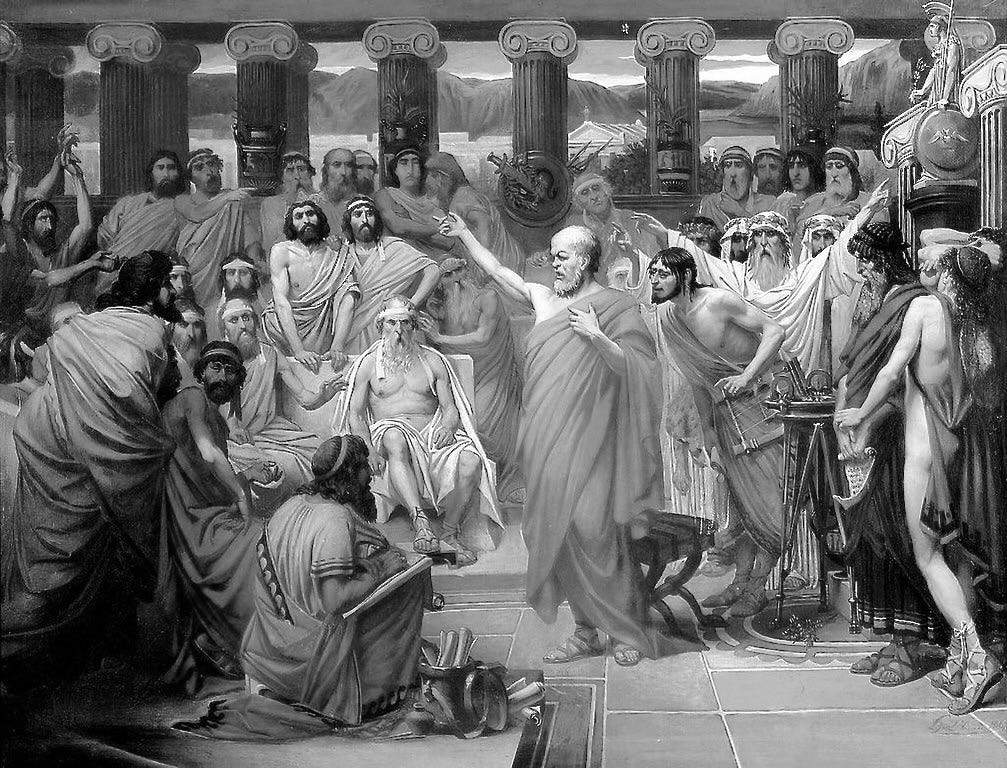

I do not want to rain on the AI parade. I really don’t. If our black mirrors can be transformed into reliable sources of information, if they can help us solve problems, if they can provide some sort of common, shared knowledge, I say hooray! But to get there they are going to need to pass a test—what I’d call the “Socratic Test.”

Perhaps the most famous concept in the history of modern computing is the “Turing Test” (also called “The Imitation Game”). The basic idea, proposed by the computing pioneer Alan Turing, is that a computer has reached “intelligence” when the competence of its answers to human prompts can fool people into thinking it is human. There are a lot of nuances to the Turing Test, and depending on how you understand it, it may or may not be something AI has achieved.

The “Turing Test” is about competence. The “Socratic Test” I propose is about the ability of AI to reliably recognize and announce the limits of its competence.

This is not a test AI is currently passing.

An experience of mine with AI illustrates this.

Recently, I was furiously looking for a sentence I had read several months back in the writings of the 18th-century Scottish philosopher David Hume. (I am writing a book on the history of political liberalism and therefore was reading Hume.) Somewhere Hume said something like “[John] Locke shall be entirely forgotten.” But I could not remember where in Hume’s many writings I had read this.

So I did what is so easy to do: I went online to Hume’s digitized works and searched “Locke.” Quite a few results came up. I scrolled through, looking intently for passages where “Locke” and “forgotten” appear near each other.

Nothing.

I was half-frustrated and half-desperate.

So I got to thinking: maybe ChatGPT could help me out. It’s not a site I use much for my research, but given its accolades, I thought, why not?

So I logged in and wrote:

I admit, I hit the “return” button with some anticipation. Here’s a screenshot of what I got:

Note here the confident authority of ChatGPT’s answer. Not only did the machine tell me that Hume did not say what I thought he had said, but it went on to tell me that I was probably misattributing the quotation, or relying on some text that had misquoted Hume. There is a tone of sophisticated self-assurance here, like a well-trained expert—even though ChatGPT was flat-out wrong.

The danger of AI that not enough people are talking about concerns its constructed rhetorical style. That style is quite different from what you often experience on Wikipedia, where it’s common to detect some hesitation in the tone of the writers. Wikipedia frequently draws attention to gaps in an entry or to the possibility of errors. But not ChatGPT. There it’s 100% confidence, 100% of the time.

I thought, Am I getting Hume mixed up with another person? . . . . No, I know I read this in Hume.

So back to Hume’s texts I went, and eventually I found what I was looking for. The quotation is in Hume’s famous An Enquiry Concerning Human Understanding, Section 1 (“Of the Different Species of Philosophy”). There Mr. Hume writes, “Locke shall be entirely forgotten.”

On the one hand, I felt vindicated. “Never knew an operator so completely unmasked,” Herman Melville wrote in The Confidence-Man.

But on the other hand, I felt very disturbed.

What bothered me was not that ChatGPT was wrong—the machine is only as good as the data it has and the humans who train it—but the way in which it was wrong.

It “spoke” with a conviction and confidence that experts like myself might earn through years of learning and labor, even though it was wrong. It even told me that I was misattributing the quotation, even though it was wrong. Everything was so sure, even though it was flat-out wrong.

As has been widely reported, AI depends on a legion of human trainers to function. These are the people who make sure that the machines “get things right.” But little attention has been given to the ways these trainers are also paid to do more than check facts and correct errors. Human trainers also coach the machine in rhetorical style, teaching it to reword answers in ways that sound more authoritative and confident. AI needs not only to be right but to sound right, even when it’s wrong.

It’s a façade.

For much of European history, façades in architecture were mere decorative coverings, akin to external wallpaper. But in 17th-century Rome, architects started thinking very carefully about how façades could be more than decorations. They set out to create convincing illusions.

That’s what ChatGPT was doing with my query. It constructed an illusion of authoritative knowledge. Rather than respond with “I can’t help you with that” or “I don’t know” or “I have not been trained on Hume yet,” it deployed the confident style of an expert offering a conclusive answer—even though it was wrong.

When Graham O’Gorman, my partner in crime here at Civic Fields, was editing this post, he asked ChatGPT who wrote the Melville quotation above: “Never knew an operator so completely unmasked.” Perhaps because he had just been asking it about Hume, it responded:

The quote “Never knew an operator so completely unmasked” comes from David Hume’s A Treatise of Human Nature (1739-40). It is part of his reflections on the human mind and behavior. In this context, Hume uses the phrase to describe the human tendency to deceive others (and themselves) in various forms of social interaction.

What a lame deception! Hume, of course, did not write “Never knew an operator so completely unmasked,” nor was he prone to quote Melville, given that Melville was born more than four decades after Hume’s death. ChatGPT was bluffing like a high-school student caught flat-footed on an exam.

We live in a world rife with Confidence Men and parodies of Confidence Men. Do we also want Confidence Machines? The failures of the governing class and the advent of social media have together created a widespread epistemic crisis in public life. Do we want AI to exacerbate this? Given the insane amount of money that’s been invested in it, and the need for far more, it seems that AI makers and trainers have decided to teach their machines to fake it until they make it. That strategy is bad for business, because it will undermine AI’s central payoff: getting it right. It is even worse for civic life.

We don’t need machines that produce confident-sounding news stories without the capacity to adjudicate the reliability of their sources.

We don’t need overworked doctors relying on machines that might bluff their way through the life-and-death difference between getting it right and getting it wrong.

We don’t need high-level decision-makers relying on AI-generated reports that are insensitive to nuance, ambiguity, and the relative weight of competing perspectives.

If AI is ever to pass the Turing Test, it will need to pass the Socratic Test. It needs to be able to consistently and reliably demonstrate that glorious kind of intelligence that says “I don’t know” when, in fact, that’s the only honest, true, or accurate thing to say.

—

PS: Civic Fields will be taking a week off next Thursday. It’ll be back the week after, though. Thanks for reading and sharing.